What is GPTQ & GGML?

Talking with friends in Discord and just my general after-work shower-thoughts tend to provoke ideas for me to talk about. This time, overthinking processes took over and I went down a rabbit hole learning yet another batch of things revolving around AI - Specifically revolving around GPTQ and GGML.

"In recent years, AI and machine learning have come a long way, with breakthroughs powered by advanced models like GPT-4 and the older GPT-3. But often, the real innovation isn't just in the models themselves—it's in the tools and techniques we use to fine-tune and optimize them for real-world use."

Two such techniques that have been getting a lot of attention in the AI community are GPTQ and GGML. Both are methods for managing machine learning models more efficiently, but they have different applications and strengths.

In this post, we will break down what GPTQ (Generalized Quantization) and GGML (Generalized Graph-based Memory Learning) are, how they differ, and their primary uses in machine learning workflows. Whether you're an AI researcher, a developer, or a machine learning enthusiast, understanding these methods will help you make better choices when optimizing AI models for performance and efficiency.

What is GPTQ?

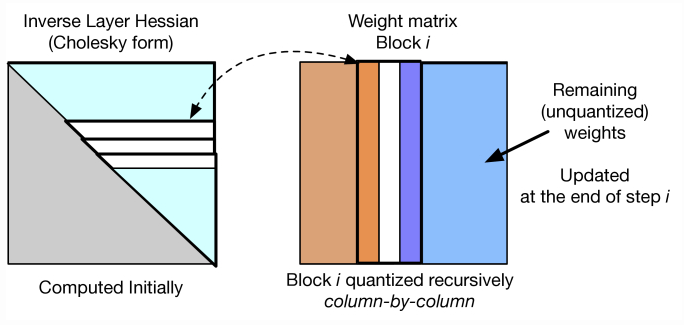

GPTQ (Generalized Quantization) is a model compression technique primarily focused on quantizing the parameters of large language models (LLMs). Quantization, within the context of all of this, refers to reducing the precision of a model's parameters (i.e. weights) to decrease memory usage and computational load without significantly sacrificing accuracy. By transforming the floating-point weights into lower bit precision (such as 8-bit or 4-bit), GPTQ makes it easier and faster to deploy these models on hardware with limited resources like edge devices or GPUs with lower memory capacities.

Common Uses of GPTQ:

- Compression for Deployment: GPTQ is often used in distributed computing systems or on edge devices where memory and computational power are limited. The goal is to make models lighter and faster without requiring significant hardware upgrades.

- Speed Optimization: GPTQ can reduce the amount of data transferred between components, speeding up the inference phase of a model and thus improving the response time.

- Energy Efficiency: By reducing the precision of operations and memory accesses, GPTQ also helps reduce the energy consumption of large models, which is essential for deploying AI in energy-constrained environments.

"GPTQ is a quantization technique designed to optimize machine learning models by reducing memory usage and improving performance, making it ideal for deploying large models in resource-constrained environments. It is commonly used for optimizing models like GPT-based architectures for faster inference and lower computational costs."

For more information on GPTQ and how to actually work with it + install it, visit this PyPi (or click the link in the notation below) to get yourself started. Head's up, it requires PyTorch.

NOTE: "GPTQ requires PyTorch and a GPU, and installing PyTorch with CUDA is tricky." Instructions: https://pypi.org/project/gptq/

What is GGML?

On the other hand, GGML (Generalized Graph-based Memory Learning) is a more advanced technique related to memory efficiency and structure. GGML leverages a graph-based approach to represent the learning processes and memory operations of machine learning models. It is designed to optimize dynamic memory allocation and data access patterns, making it particularly useful for models that need to handle large datasets or involve complex, multi-layered neural networks.

- Low-level cross-platform implementation.

- Integer quantization support.

- Broad hardware support.

- Automatic differentiation.

- ADAM and L-BFGS optimizers.

- No third-party dependencies.

- Zero memory allocations during runtime.

"GGML is a tensor library for machine learning to enable large models and high performance on commodity hardware. It is used by llama.cpp and whisper.cpp" - From ggml.ai

Common Uses of GGML:

- Memory Management in Large Models: GGML is applied in scenarios where models need to store and retrieve massive amounts of data efficiently. By structuring memory access in a graph-based system, GGML enhances the management of multiple training states and model parameters.

- Complex Networks: GGML is ideal for applications where the learning process involves highly interconnected layers or graph-based structures, such as in graph neural networks (GNNs) or reinforcement learning.

- Long-Term Memory: One of the notable aspects of GGML is its ability to track long-term dependencies across training sessions. This can improve the model's performance when dealing with tasks like sequence prediction or time-series forecasting, where previous data needs to be retained for future learning.

Key Differences Between GPTQ and GGML

While both GPTQ and GGML focus on optimizing machine learning models, their goals, techniques, and applications diverge in significant ways.

1. Focus of Optimization:

- GPTQ: The primary focus of GPTQ is model size and efficiency. It reduces the precision of the model's weights, thereby optimizing the model for memory usage, speed, and power consumption. It's best suited for scenarios where you need to deploy large models in resource-constrained environments.

- GGML: GGML, on the other hand, is focused on memory management and optimizing data storage and retrieval during the learning process. This makes it ideal for situations where complex data dependencies or large memory states are involved.

2. Use Cases:

- GPTQ: Commonly used for compressing large language models, such as GPT-based architectures, to enable deployment on devices or systems with limited resources. It works well for large-scale applications that need to balance between efficiency and performance.

- GGML: This technique is better suited for graph-based learning tasks, such as reinforcement learning, GNNs, or applications requiring long-term memory retention and efficient retrieval of complex datasets. It's beneficial in scenarios where model complexity and data interdependence play crucial roles.

3. Techniques:

- GPTQ: GPTQ uses quantization techniques to reduce the bit-width of model weights, often converting them from floating-point to integer precision (such as 8-bit or 4-bit). This reduces memory requirements and speeds up computations.

- GGML: GGML employs graph-based memory structures that optimize the way a model accesses and stores data, improving both short-term and long-term memory performance. This is more about improving the data flow and memory access patterns rather than focusing solely on model size.

4. Performance Implications:

- GPTQ: By reducing precision, GPTQ can sometimes lead to minor degradation in model accuracy, especially if the quantization process is too aggressive. However, this is generally a trade-off that provides large benefits in terms of performance and memory efficiency.

- GGML: GGML has a minimal impact on model accuracy because it is focused on improving memory operations and data flow rather than directly altering model parameters. Its effectiveness is more evident in applications requiring complex memory management, where maintaining long-term data dependencies is crucial.

5. Deployment:

- GPTQ: GPTQ is more suited for deployment on edge devices, GPUs, and in scenarios where high-speed inference is needed without compromising too much on accuracy. This makes it ideal for real-time applications.

- GGML: GGML, due to its complexity and focus on memory operations, is often used in research-heavy environments or large-scale AI systems where memory management and long-term learning are more important than real-time inference.

"GPTQ and GGML are both important tools for improving AI models, but they serve different purposes in the machine learning world. "

The Wrap Up:

GPTQ is all about quantizing model weights, which helps save memory and boost speed—making it a great choice for situations where resources are limited, such as deploying large language models (like GPT-3 or GPT-4) for real-time applications in devices or systems with less computational power. GGML, on the other hand, focuses on graph-based memory management, optimizing how a model stores and accesses data over time. This is especially helpful for complex tasks that require handling long-term dependencies and managing large amounts of data, such as in graph neural networks (GNNs) or reinforcement learning systems.

When deciding between the two, think about your specific needs. If you're working with large language models that need to be deployed efficiently in resource-constrained environments, GPTQ is probably the way to go. But if your task involves handling intricate data dependencies or memory-heavy processes, GGML could be the better fit. Both techniques are powerful, but the right one really depends on the type of project you're tackling and the specific challenges you're facing.

If you ever have questions about anything LLM related, I like talking about this stuff. You can contact me through my About Page or email me directly at [email protected]!

☄️Footnotes: Explain Like I'm 5!

GPTQ: Imagine your robot has a big brain, but it's too big and slow to fit in a tiny robot, like the one in your phone. GPTQ is like taking away some of the little details in the brain to make it smaller and faster, so it can fit in the little robot. It doesn't lose much smarts, but it works much quicker and doesn't take up too much space.

GGML: It's like helping the robot organize its thoughts and memories better. When the robot needs to remember lots of things over time (like a list of steps in a game), GGML helps it remember those things without getting confused. It's like making a better filing system for the robot's brain, so it can find and use memories when it needs them!

The Big Differences:

- GPTQ helps make the robot’s brain smaller and faster, like shrinking a big picture so it can fit in a small frame.

- GGML helps the robot organize and store its memories better, like putting things in labeled boxes so the robot can find them easily later.

Member discussion